Improving my Solar Data Collection

Using TypeScript and Bun to collect data from Deye and Fronius inverters.

I also didn't see a reason why a third party should have that info, and with a home server on hand, why not collect all the data locally?

System 1: Deye

One of the systems is built around a microinverter by Deye. It has a very clumsy management interface and no HTTP API. However, it does speak Modbus TCP, a common protocol in industrial applications, and exposes most of the interesting metrics over this interface. Unfortunately I don't have experience with this protocol, but I didn't need any either: this brand of inverter is quite popular and someone had already done the heavy lifting. Lucky me.

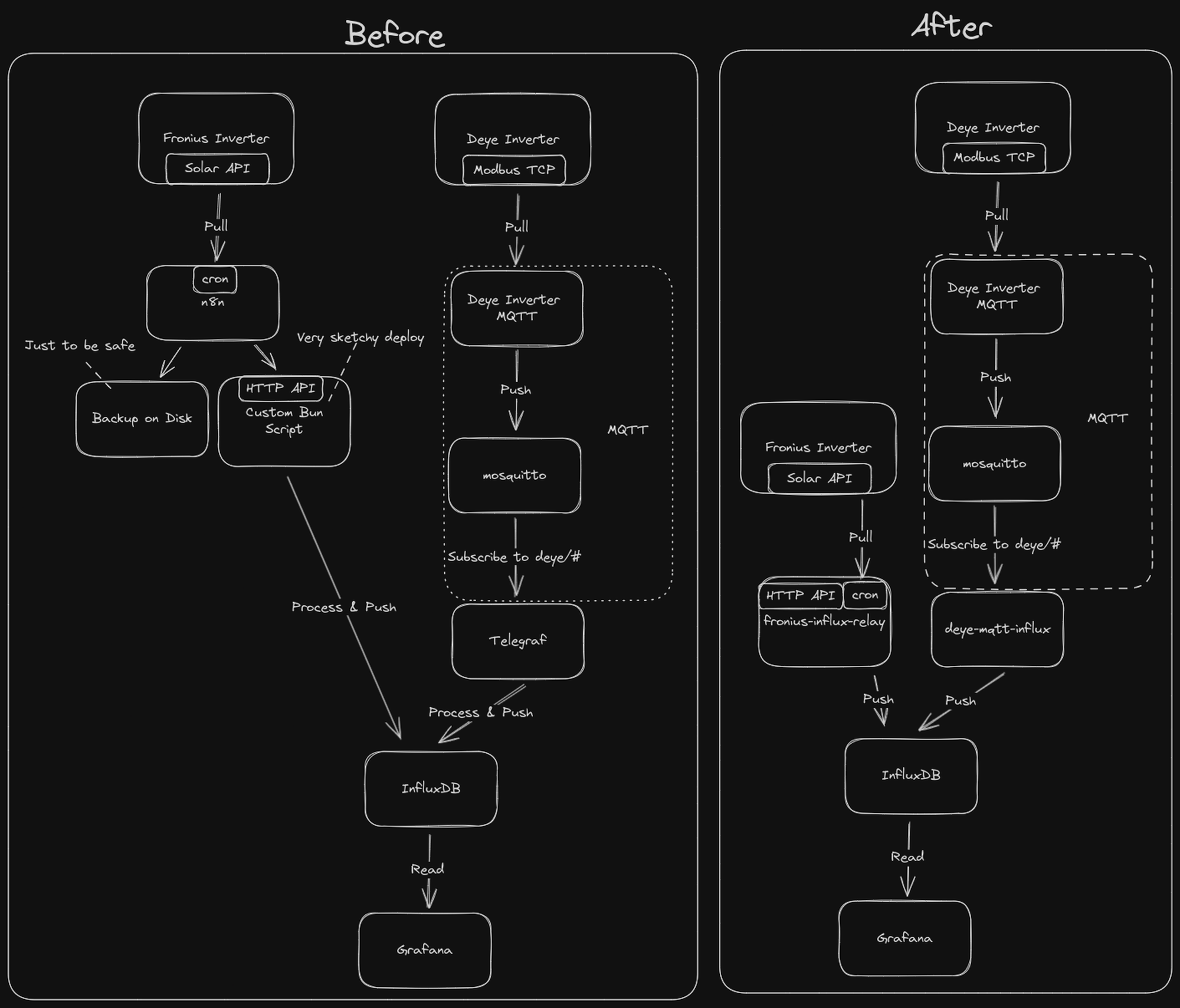

Enter deye-inverter-mqtt, a tool that queries this Modbus interface and forwards the data via MQTT. MQTT is another protocol I hadn't worked with before. It's commonly used for IoT and home automation applications. After quickly reading up on it, I set up Mosquitto (an MQTT broker) in a Docker container and added Telegraf (a data collection agent) and InfluxDB (a time series database) on top of it to get up and running quickly. Now I had 1 new protocol and 4 new tools to learn.

System 2: Fronius

The other system is built around a Fronius Symo GEN24 inverter. It's quite a bit more substantial and has much better documentation, lucky me yet again. This inverter actually has a usable HTTP-based API hosted on the inverter itself. That's more within my comfort zone. I wrote a quick script in TypeScript that saves whatever data the inverter spits out into InfluxDB, deployed it to my home server in all the wrong ways, and scheduled the querying with n8n, a low-code automation platform. For good measure I also threw in a backup to disk.
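To give an idea of what such a quick script might involve, here is a minimal sketch. It assumes the power flow endpoint of the Fronius Solar API (`/solar_api/v1/GetPowerFlowRealtimeData.fcgi`) and only a small subset of its response. The names `PowerSnapshot`, `toSnapshot` and `fetchSnapshot` are made up for this illustration, and the real script simply stored whatever came back.

```typescript
// Subset of the power flow response; only the fields used below are typed.
// Assumption: values can be null when they are unavailable (e.g. no PV power at night).
interface PowerFlowResponse {
  Body: {
    Data: {
      Site: { P_PV: number | null; P_Grid: number | null; P_Load: number | null };
    };
  };
}

// Hypothetical flattened shape that is easier to store as fields
interface PowerSnapshot {
  pvWatts: number;
  gridWatts: number;
  loadWatts: number;
}

// Pick the interesting values and treat missing readings as 0
function toSnapshot(res: PowerFlowResponse): PowerSnapshot {
  const site = res.Body.Data.Site;
  return {
    pvWatts: site.P_PV ?? 0,
    gridWatts: site.P_Grid ?? 0,
    loadWatts: site.P_Load ?? 0,
  };
}

// `host` is the inverter's address on the local network (placeholder)
async function fetchSnapshot(host: string): Promise<PowerSnapshot> {
  const res = await fetch(
    `http://${host}/solar_api/v1/GetPowerFlowRealtimeData.fcgi`,
  );
  if (!res.ok) throw new Error(`inverter responded with ${res.status}`);
  return toSnapshot((await res.json()) as PowerFlowResponse);
}
```

Keeping the mapping in `toSnapshot` separate from the network call makes it easy to try out with a saved response instead of a live inverter.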

Done! ... right?

This got me to the point where I could collect data and didn't have to stress about things going horribly wrong. I kept this setup for a few months, until my periodic urge to rewrite everything kicked in.

So what were the actual issues with this approach?

Sketchy Bun Deploy

The TypeScript project was really not deployed well. I used a Bun Docker container for the runtime but didn't create a proper image for it. I just shoehorned the default image into running my code, which meant manually running rsync every time I wanted to change the code on the server.

Unnecessary amount of Docker

I now had 5 Docker containers running just to get some data into a DB. That seemed excessive to me. The fact that I didn't know how to operate them properly didn't make things any better. I felt like I could get that number down by at least 1, maybe 2.

I don't get along with Telegraf

The theory behind Telegraf as a tool seems very neat: one place to define all your data collection logic, with the data transformed for you automatically. My problem was that I kept getting stuck on seemingly trivial tasks, like parsing the MQTT payloads into the proper data types for the DB, while thinking: "I can do that in a few lines of code". Also, the data format that Telegraf's MQTT consumer used to save the Deye metrics to the DB was not ideal, since it put every measurement into a separate data point. I'm sure this all comes down to me not configuring it properly, and I will revisit Telegraf at some point, but that's for a future project.
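For illustration, those "few lines of code" could look something like this sketch. `parsePayload` is a hypothetical helper, and it assumes that payloads arrive as text and that anything that looks like a number should be stored as one.

```typescript
type FieldValue = number | string;

// Hypothetical helper: turn a raw MQTT payload string into a typed value.
// Numeric payloads become numbers, everything else stays a (trimmed) string.
function parsePayload(raw: string): FieldValue {
  const trimmed = raw.trim();
  // Number("") is 0, so rule out empty payloads before converting
  if (trimmed === "") return trimmed;
  const asNumber = Number(trimmed);
  return Number.isFinite(asNumber) ? asNumber : trimmed;
}
```

With this, a payload like `"230.5"` ends up as a number in the DB, while a status text like `"online"` is kept as a string.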

Doing it right (Deye MQTT)

As you probably expect by now, this story has at least one chapter for replacing Telegraf with something custom. This is that chapter.

We want all ~30 metrics extracted by deye-inverter-mqtt to be saved in a single data point in Influx. The challenge is that those metrics get sent one by one via MQTT. What we need to do is identify which MQTT messages belong together, parse the values properly, bundle them up in a neat package and save them to Influx.
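To make the "single data point" part concrete, here is a rough sketch of how a set of already parsed metrics could be turned into one line of InfluxDB line protocol. All names are made up for this example. It assumes topics of the form `deye/ac/active_power`, a measurement name without special characters, and a write request that uses millisecond precision.

```typescript
type FieldValue = number | string;

// Assumption: topics look like "deye/ac/active_power". The prefix is stripped
// and the remaining slashes become underscores to form the field key.
function topicToFieldKey(topic: string, prefix = "deye/"): string {
  const name = topic.startsWith(prefix) ? topic.slice(prefix.length) : topic;
  return name.replace(/\//g, "_");
}

// Commas, equals signs and spaces in field keys must be backslash-escaped
function escapeKey(key: string): string {
  return key.replace(/[,= ]/g, (c) => `\\${c}`);
}

// Numbers are written as-is; strings are double-quoted with \ and " escaped
function formatValue(value: FieldValue): string {
  if (typeof value === "number") return String(value);
  return `"${value.replace(/\\/g, "\\\\").replace(/"/g, '\\"')}"`;
}

// Build one line: <measurement> <field>=<value>,... <timestamp in ms>
function toLineProtocol(
  measurement: string,
  fields: Record<string, FieldValue>,
  timestamp: Date,
): string {
  const fieldSet = Object.entries(fields)
    .map(([key, value]) => `${escapeKey(key)}=${formatValue(value)}`)
    .join(",");
  if (fieldSet === "") throw new Error("a data point needs at least one field");
  return `${measurement} ${fieldSet} ${timestamp.getTime()}`;
}
```

Two metrics that arrived as separate MQTT messages then end up on the same line, sharing one timestamp, instead of becoming two separate points.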

I defined 1000ms as the cutoff from the first message to the last message in a bundle. Whenever a message arrives, I check whether the current bundle has already reached this cutoff age and create a new one if it has. At the same time I schedule the new bundle to be saved to the DB once that same cutoff duration has passed. That way I don't risk saving empty bundles, as I would with setInterval, and a bundle never sits waiting to be saved until the next one is created.

    const bundleTimeoutMs = 1000;

    let bundle: Bundle | undefined;

    mqttClient.on("message", (topic, message, packet) => {
      console.log(`message in ${topic}: ${message.toString()}`);
      const messageTs = new Date();
      if (
        !bundle ||
        messageTs.getTime() - bundle.timestamp.getTime() > bundleTimeoutMs
      ) {
        // initialize a new bundle when necessary
        const newBundle: Bundle = { timestamp: messageTs, entries: [] };
        bundle = newBundle;
        setTimeout(async () => {
          // save this exact bundle to influx after our specified timeout;
          // holding on to newBundle avoids saving a newer bundle by
          // accident if the timer fires late
          await saveBundle(newBundle);
        }, bundleTimeoutMs);
      }
      bundle.entries.push({ topic, message: message.toString(), packet });
    });

This was wrapped up into a proper Docker image this time, using the excellent low-friction option of a GitHub Action with GitHub's own container registry, following along with this great piece of documentation for the GitHub Action and the Bun docs for the actual Dockerfile.

Overhauling Fronius Collection

Armed with the knowledge of how to publish my own Docker images, I proceeded to overhaul the Fronius side of the chain too. By now I was confident enough in the stability of the Bun runtime to trust it without the additional backups to disk. To cut down on the number of Docker containers, n8n is removed from the stack as well, since all I really need is a cron-style job that runs every minute. We can do that in our own code too.
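Running something every minute without an external scheduler can be done with a plain timer. The following is only a sketch of one way to do it, not necessarily what the relay does. `msUntilNextMinute` and `runEveryMinute` are hypothetical names.

```typescript
// Milliseconds from `now` until the start of the next full minute
function msUntilNextMinute(now: Date): number {
  const msIntoMinute = now.getSeconds() * 1000 + now.getMilliseconds();
  return 60000 - msIntoMinute;
}

// Runs `job` at the top of every minute. Re-arming the timer after each run
// (instead of using setInterval) keeps the schedule aligned to the clock.
function runEveryMinute(job: () => Promise<void>): void {
  setTimeout(async () => {
    try {
      await job();
    } catch (err) {
      // a failed run should not stop future runs
      console.error("scheduled job failed", err);
    } finally {
      runEveryMinute(job);
    }
  }, msUntilNextMinute(new Date()));
}
```

Calling `runEveryMinute` once at startup with the function that queries the inverter would be enough. If a run takes longer than a minute, the next one simply starts at the following full minute.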

I kept the API for accepting the payloads from an external source in place to play nicely with other automation applications.

Result

Wrapping this all up, I am now left with 1 fewer container, 2 projects that could be helpful to other people (everything is open sourced on GitHub), a much better understanding of my own home server infrastructure, better data quality, and a new blog article. I'll call that a success for a weekend project.

Future

The new structure also allows me to trivially add new parts to this system should I decide to go further down the MQTT path. A potential setup with Home Assistant could look like this: another piece of custom code queries the data from Fronius, ingests it into InfluxDB using the relay's HTTP API, and also publishes the data via MQTT.

At the moment, however, my ideal scenario would rather get rid of MQTT in this flow altogether, since I'm not into home automation (yet). That would involve gathering the data via Modbus myself, which sounds like a nice challenge for another weekend.

Links

The excellent deye-inverter-mqtt project: https://github.com/kbialek/deye-inverter-mqtt/

My adapter for bundling the individual MQTT messages into a single InfluxDB data point: https://github.com/GerritPlehn/deye-mqtt-influx

My cron controlled Fronius crawler and InfluxDB relay: https://github.com/GerritPlehn/fronius-influx-relay

InfluxDB: https://www.influxdata.com/

Excalidraw, used for graphics: https://excalidraw.com/
